Join us on Monday, November 16th at 4:30pm in the Strange Lounge of Main Hall for the Strange Philosophy Thing. All are welcome to this informal conversation, and to refreshments.

I want to center the discussion on Monday around two questions.

(Q1) Do intuitions provide us with reasons to believe?

(Q2) Does studying philosophy improve students’ intuitions, or their access to their intuitions? Does it simply acquaint them with received interpretations of various puzzle cases, thought experiments, and the like? Or does it do something else entirely?

Consider a problem case or thought experiment you’ve encountered in a class, such as Gettier’s famous thought experiment, which is meant to yield a counterexample to the traditional analysis of knowledge as justified true belief. Suppose an individual, Smith, has just applied for a job, has just acquired good evidence for, and has formed, the belief that another applicant

(1) Jones will get the job and has 10 coins in his pocket.

Suppose Smith just counted the coins in Jones’s pocket, and the CEO just told Smith that Jones would get the job. Suppose Smith then infers that

(2) The person who will get the job has 10 coins in their pocket.

Now suppose, despite what the CEO thought, that it is in fact Smith who will get the job, and, while Smith is unaware of this, she happens to also have exactly 10 coins in her pocket. Gettier then notes that (2) is true, and that Smith is justified in her belief in it. But, he thinks, something has gone wrong, and Smith does not know that the person who gets the job has 10 coins in their pocket.

It is this very last move, in the last sentence, in which Gettier is appealing to our intuitions. He is hoping we have the same intuition, that Smith does not know that the person who gets the job has 10 coins in their pocket. It is only if we have this intuition that Gettier’s case will act for us as a counterexample to the traditional analysis of knowledge as justified true belief, as a case in which someone is justified in believing something that is true, but they nevertheless fail to know it.

Do our intuitions provide evidence for our conclusions about cases like these? Even if I agree with Gettier, can my intuition that Smith does not know act as evidence for me in my belief that Smith does not know? Challenges have been brought against the idea that intuitions can act as evidence in this way. A potential a posteriori challenge comes from experimental philosophy, which has had mixed results concerning the universality of intuitions in various sorts of cases. Early experiments (e.g., Weinberg et al. 2001 and Machery et al. 2004) concerning epistemic intuitions, like the one at issue in Gettier’s case, and semantic intuitions, suggested there are cross-cultural differences which threaten to undermine any claim they have to being evidence. Later, more nuanced studies (e.g., Kim and Yuan 2015) have suggested that perhaps there isn’t as much to worry about in the case of epistemic intuitions. But questions linger concerning the universality of epistemic, semantic, and other sorts of intuitions.

A priori challenges to the evidential status of intuitions have also been developed. Earlenbaugh and Molyneux (2009), for example, argue that intuitions can’t be used as evidence because another’s intuition that P can’t ever act as evidence for me to believe that P, as can another’s perceptions. If another perceives that P though I do not (perhaps I am looking the other way), I will take their perception, via their testimony, as evidence as a matter of course. Intuitions never work like this, Earlenbaugh and Molyneux claim.

Another question discussed in a recent article is whether education in philosophy trains students to have better (access to) their intuitions (let’s assume they can act as evidence) about various cases, or whether something else is happening, like that it is merely relaying to students the received interpretations of the various cases. When you first encounter the Gettier case, is the instructor enabling you to become aware of your own intuition about the case? Or is the instructor instead simply reporting what the philosophical community’s general consensus is about the case? Or is something else going on? Whichever way, does it matter? Do some answers to these questions, for example, threaten to undermine the legitimacy of philosophy as a discipline? See here for a brief overview of the new article from the Daily Nous, as well as a description of even more possibilities of what could be going on than I mentioned above.

I look forward to discussing these issues with you on Monday!

Spider Minds, Extended Minds, and the Web

Join us tomorrow afternoon at 4:30 in the Strange Lounge of Main Hall for the Strange Philosophy Thing. All are welcome to this informal conversation, and to our refreshments!

According to the extended mind hypothesis, sometimes objects outside of an individual’s body can come to constitute part of that individual’s mind. This view, originally articulated in a 1998 paper by philosophers Andy Clark and David Chalmers, has primarily been considered as a hypothesis about human thought. However, some exciting recent research within evolutionary biology suggests that it might be even more applicable to invertebrate minds.

As discussed in this fascinating article, evolutionary biologists Hilton Japyassú (Federal University of Bahia) and Kevin Laland (University of Saint Andrews) have “argued in a review paper, published in the journal Animal Cognition, that a spider’s web is at least an adjustable part of its sensory apparatus, and at most an extension of the spider’s cognitive system.” Through a series of experiments, Japyassú has demonstrated that “changes in the spider’s cognitive state will alter the web, and changes in the web will likewise ripple into the spider’s cognitive state,” suggesting that the two are working together as a larger cognitive system. In a series of studies, for example, Japyassú showed that web-building spiders, which “are near blind, and…interact with the world almost solely through vibrations” are “put on high alert” and “rushed toward prey more quickly” when strands of their web were artificially tightened by experimenters, leading these strands to transmit vibrations more forcefully. And, as the article notes, “the same sort of effect works in the opposite direction, too. Let the orb spider Octonoba sybotides go hungry, changing its internal state, and it will tighten its radial threads so it can tune in to even small prey hitting the web. ‘She tenses the threads of the web so that she can filter information that is coming to her brain,’ Japyassú said. ‘This is almost the same thing as if she was filtering things in her own brain.’”

The fascinating article includes a number of other neat examples, involving spiders, octopuses, and grasshoppers. In fact, as the article discusses, it may turn out that extended cognition strategies are favored by natural selection for small organisms, and therefore that the extended mind hypothesis more accurately describes invertebrates than any other group of animals. Haller’s Rule, first proposed in 1762 by the Swiss naturalist after whom it is named, is a general rule of biology, which holds across the animal kingdom: “smaller creatures almost always devote a larger portion of their body weight to their brains, which require more calories to fuel than other types of tissue.” Work by Japyassú and others, though, is beginning to suggest “that spiders outsource problem solving to their webs as an end run around Haller’s rule”. If this is explained by evolutionary considerations related to Haller’s Rule, then we would expect to see responsive relationships between other small animals and components of their environments as well.

Opponents of Japyassú object to his definition of cognition in terms of acquiring, manipulating and storing information, suggesting that this misses an important distinction between information and knowledge. Cognition, they contend, involves interpreting information “into some sort of abstract, meaningful representation of the world, which the web — or a tray of Scrabble tiles — can’t quite manage by itself”. Others contend that Japyassú’s research misrepresents the amount of embodied, non-extended cognition that spiders are capable of. For example, “Fiona Cross and Robert Jackson, both of the University of Canterbury in New Zealand…study jumping spiders, which don’t have their own webs but will sometimes vibrate an existing web, luring another spider out to attack. Their work suggests that jumping spiders do appear to hold on to mental representations when it comes to planning routes and hunting specific prey. The spiders even seem to differentiate among ‘one,’ ‘two’ and ‘many’ when confronted with a quantity of prey items that conflicts with the number they initially saw.”

I look forward to talking with you about all this—about animal minds, extended minds, and the way our environments might enter into thought—tomorrow at the Strange Thing!

A Fun Argument that You Should Have Never Been Born

Life is so terrible, it would have been better to not have been born. Who is so lucky? Not one in a hundred thousand! –An old Jewish joke

A few weeks ago, Prof. Phelan led us in a discussion that began with the intuition that morality takes into account not just possible harms to actual people, but possible harms to possible people.

This week, we’ll explore a different puzzle beginning from the same place. And again, we’ll have to say some odd things in order to compare the life that a merely possible person would live if they came into existence with the state of affairs in which that person does not come into existence.

David Benatar argues for “anti-natalism,” the position that it’s always wrong to bring people into existence. He tries to show this by showing that coming into existence is always a harm. The basic argument is pretty simple, but we’ll discuss some of the complications on Monday.

The first step is to show that sometimes coming into existence can be a harm. Benatar suggests that you are harmed if you are put into a state that is bad for you while the alternative would not have been bad for you. Of course, not existing is not bad for anyone, because there’s no one for it to be bad for. So, if someone’s life is not worth living (which is bad for them), then they are harmed because not existing would not have been bad for them.

The second step is to show that not coming into existence is never a harm. When we talk about existing people, we say that missing out on good things, such as pleasure, is bad for them. Or that getting the good thing is better for them. But for “someone” who never comes into existence, there’s no one that it’s bad or worse for (nor is there someone who “misses out” on the good thing).

So, prospectively, before “someone” is brought into existence:

With respect to the pains, harms, and other bad things that would be in the life lived, existing is worse than not existing.

With respect to the pleasures and other good things that would be in the life lived, not existing is not worse than existing.

So, if someone is harmed when they are put into a state that is worse for them than the alternative state, coming into existence is always a harm.

That also means that, for all of us, no matter how good our lives may be, we were all harmed by being brought into existence. (This is perfectly compatible with saying ceasing to exist would be a harm or bad for us.)

This conclusion likely strikes you as incorrect, as it does many people.

The challenge is to identify where the reasoning goes wrong (in this and in Benatar’s parallel arguments that I’ll have on hand), so we can see what justifies rejecting the conclusion. And if we can’t find where it goes wrong, perhaps we’re not justified in rejecting it.

Don’t be a stranger (in the sense of not showing up–please be a stranger in the sense of coming to Strange Philosophy Thing!) Join us in the Strange Lounge of Main Hall on Monday, 2/13.

The Ethicist, New York Times

Please join us tomorrow, Monday, Feb 6 at 4:30pm in the Strange Lounge, where we will discuss the New York Times’ write-in ethical advice column “The Ethicist” by Kwame Anthony Appiah. [Did you know that students and faculty can access a free online membership to the New York Times, through Lawrence’s Mudd Library? If interested, follow this link.] We will discuss a few of the write-ins, and Appiah’s advice. You do not need an online subscription for this meeting; instead, I’ve included four write-ins below that we’ll start with (think about them and I’ll bring Appiah’s response and advice on Monday). If anyone wants to excerpt other write-ins for this meeting, you are welcome to:

- November 23, 2021: When my father died, I inherited a large trust fund and sole ownership of a family business. I was young and woefully unprepared, so I put my inheritance on the back burner and lived my life as if I was financially “normal.” However, since the pandemic, my portfolio has hit a new high. I am utterly distraught. I feel that I should have never gotten so wealthy when people are suffering so much. I’ve been seriously considering giving a large portion away, but the more I talk to people, the more I realize that to give away large sums of money responsibly and ethically turns my life into a job that I never wanted. I don’t want my father’s money to become my life, my career or the most significant thing about me, even though I know that I benefit from it. I have privileges with it, it gives me options and frankly I could not afford to live in a big city without it. My questions are these: How much money is it ethical to keep, and how much would it be ethical to give away? What is the best way to decide who should receive the money? And how much time and responsibility and rewriting of my life do I owe this gift that often feels like a burden? Name Withheld

- November 23, 2021: In an effort to provide for the long-term financial stability of her children, my grandmother bought a large amount of stock in a fossil-fuel company, which she left to my father. I find it heartbreaking that the investment she made and that my father has maintained to provide safety and security is one of the things that is actively moving the planet toward terrible destruction. My father is elderly and would not consider divesting from this company. This stock will be a majority of his bequest to my siblings and me. I hope my father will live for many more years, but when that stock — and the associated wealth — come to me, what is the ethical thing for me to do with the money? I feel guilt and disgust at the prospect of profiting off the suffering of so many. Name Withheld

- October 4, 2022: My mother has an undiagnosed mental illness that makes her incapable of accepting reality and that has caused her to be emotionally abusive my entire life. My sister and I have maintained a relationship with her, but with strict boundaries and limited visits. She lives alone in a home she inherited, which is falling apart. She is a hoarder and won’t let in repair people or anyone else for that matter. She is 69 years old, hasn’t seen a doctor or dentist in decades. Her car stopped running long ago, and she walks a mile or two to the supermarket, even in winter. She lives off a meager Social Security income. Her pets are not properly cared for, yet she won’t let me adopt them. What options do my sister and I have as she ages and her living situation deteriorates further? And what obligations do we have toward her? She will never admit anything is wrong with her, and neither of us is willing to let her live with us. She would ruin our lives and our families’ lives. This situation is like a dark cloud looming over our heads. My sister and I are the only ones in her life who can help her, but neither of us can afford to support her financially or otherwise. Name Withheld

- October 4, 2022: I have a middle-aged sister who has struggled with a personality disorder since adolescence. It has been challenging for her to hold a job. She has relied on my parents for assistance to raise her son, who is now grown, and to cover all of her living expenses, even as they have moved into their retirement years and live on a fixed income. I told my parents that I do not intend to support her when they are no longer able to or are no longer living, because she refuses to take any responsibility for herself or to comply with medical or mental health care plans. Now my parents are suggesting that I help with some of their expenses. But they would not need assistance if they were not dedicating a significant portion of their income to my sister’s support. How do I determine what my obligations here are? I have adolescent children to whom I also have obligations, like paying for college. Name Withheld

Crows, Ravens, Persons

Join us on Monday, January 30th in the Strange Lounge of Main Hall for the Strange Philosophy Thing. All are welcome to this informal conversation, and to refreshments.

I ran across this article recently. In it, the author Diana Kwon relates research done by Diana Liao and others in Andreas Nieder’s animal physiology lab at the University of Tuebingen, in which they provide evidence that crows can understand recursion. Kwon also discusses similar research done on monkeys and humans. Recursion is a distinguishing feature of human language, as in, “The horse raced past the barn built by my uncle is a Palomino”. This made me think about Steven Pinker’s attack, in his 1994 book The Language Instinct, on studies through the second half of the 20th century which sought to show that chimpanzees and bonobos were capable of the sort of “open” languages that human beings are capable of (as opposed to “closed” fixed collections of distinct calls). One of Pinker’s criticisms involved highlighting the fact that the English directions to which the bonobos of one study responded were quite simple; none were recursive. Kwon is clear that the results of Liao et al.’s study do not mean that crows are capable of language. (Indeed, this in itself raises the question of why crows understand recursion if not for applications in communication.) But it is nonetheless intriguing if crows have this rather sophisticated cognitive ability.

Corvids have impressed us before, e.g., in their apparent ability to attribute mental states like visual perception to other members of the same species. In another study (Bugnyar et al. 2015), researchers showed that ravens, which are close relatives of crows, behaved differently depending on whether a known peephole in their habitat was open or closed. The ravens were not able to see whether or not there was another raven on the other side of the peephole when it was open. But they would nonetheless reliably re-hide a treat if the peephole was open when they first hid it, and they would not do so if the peephole was closed at first-hiding. The authors of this study conclude that these ravens must understand that other ravens might see them. This sophisticated and generalized reasoning, which involves the attribution of mental states to others that might be present, is another impressive ability not shared by many in the animal kingdom.

All this reminded me of potential conditions a thing must meet to be a person. Daniel Dennett discusses several. He says,

“The first and most obvious theme is that persons are rational beings. . . . The second theme is that persons are beings to which states of consciousness are attributed, or to which psychological or mental Intentional predicates, are ascribed. . . . The third theme is that whether something counts as a person depends in some way on an attitude taken toward it, a stance adopted with respect to it. . . . The fourth theme is that the object toward which this personal stance is taken must be capable of reciprocating in some way. . . . This reciprocity has sometimes been rather uninformatively expressed by the slogan: to be a person is to treat others as persons, and with this expression has often gone the claim that treating another as a person is treating him morally. . . . The fifth theme is that persons must be capable of verbal communication. . . . The sixth theme is that persons are distinguishable from other entities by being conscious in some special way: there is a way in which we are conscious in which no other species is conscious. Sometimes this is identified as self-consciousness of one sort or another.” (Quoted from “Conditions of Personhood”, in Brainstorms 1976, 269-70.)

While crows may not have an open language like humans, and are perhaps not even capable of it, a key element of such a structure, viz., recursion, may be present. So even if corvids are not capable of verbal communication, they may nonetheless go some way toward meeting Dennett’s sixth condition, insofar as they may possess a cognitive ability that is a distinctive aspect of human language. This, coupled with their apparent ability to understand that others have mental states, and reason accordingly, makes me wonder how far toward Dennett’s fourth condition corvids go as well.

I thought we could discuss questions about these and other examples of non-human animals going some way to meeting some of the conditions of personhood Dennett sets out. And I thought we could have a more general discussion about the adequacy of these conditions. What is a person? What sets a person apart from non-persons? Should some of Dennett’s conditions of personhood be discarded? Are there any other conditions you think something needs to meet to qualify as a person? Can we ever hope to find a set of conditions that are jointly sufficient for personhood, i.e., some set of conditions that, if met, guarantee that a thing is a person?

I look forward to discussing these and other questions with you on Monday!

Prof. Dixon

The Ethical Weight of Possible People

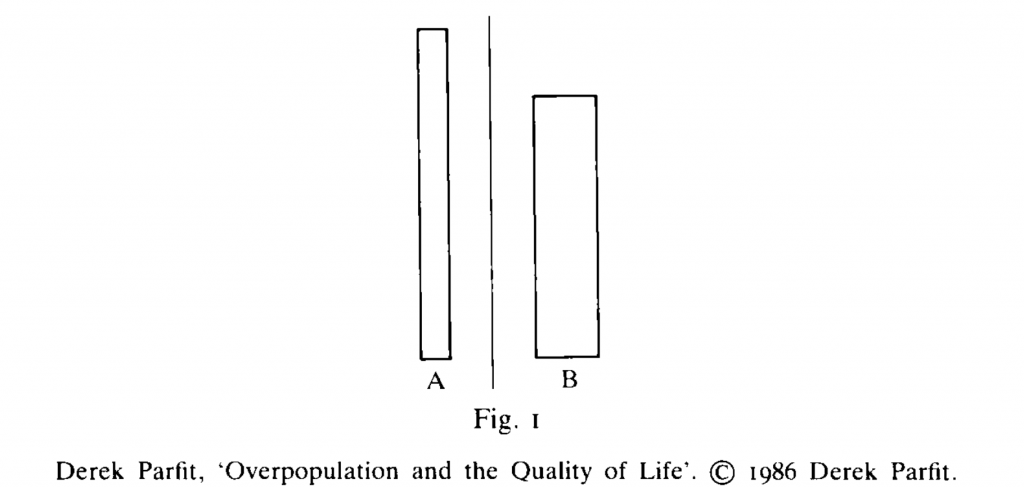

Suppose that you could make the world a better place, without too large a cost to yourself and without violating any deontological principles (for example, continuing to respect people as ends). Many people would suppose that, if you could, you would have an obligation to do so. Now, consider two possible worlds, A & B:

Derek Parfit (1986) writes of these as possible outcomes for a group in “Overpopulation and the Quality of Life”:

Consider the outcomes that might be produced, in some part of the world, by two rates of population growth. Suppose that, if there is faster growth, there would later be more people, who would all be worse off. These outcomes are shown in Fig. i. The width of the blocks shows the number of people living; the height shows how well off these people are. Compared with outcome A, outcome B would have twice as many people, who would all be worse off. To avoid irrelevant complications, I assume that in each outcome there would be no inequality: no one would be worse off than anyone else. I also assume that everyone’s life would be well worth living. There are various ways in which, because there would be twice as many people in outcome B, these people might be all worse off than the people in A. There might be worse housing, overcrowded schools, more pollution, less unspoilt countryside, fewer opportunities, and a smaller share per person of various other kinds of resources. I shall say, for short, that in B there is a lower quality of life.

Despite the fact that each individual’s quality of life is lower in B than in A, the total quality of life in B is, in some sense, greater, because more people exist in B, leading slightly less good lives-worth-living.

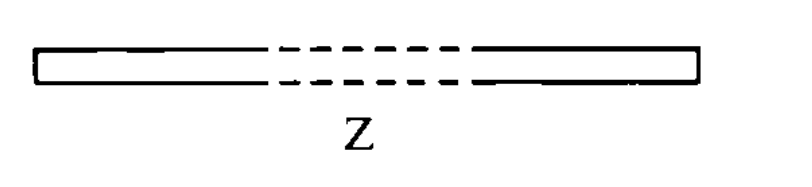

Is world B a better world than world A? If we had an opportunity to support policies that would make the world more like A, on the one hand, or more like B, on the other, would it be more appropriate to support policies that made the world more like B? And how do our intuitions shift when we consider world Z, which Parfit describes as including “an enormous population all of whom have lives that are not much above the level where they would cease to be worth living…The people in Z never suffer; but all they have is muzak and potatoes” (148)?

Leaving behind our vague obligation from above, to make the world a better place, suppose we accept some form of Utilitarianism, which claims that the right action is the one that produces the most good. It’s hard to see how such a theorist avoids the conclusion that, all else being equal, World Z is preferable to World A. This is a version of what Parfit calls:

The Repugnant Conclusion: Compared with the existence of very many people— say, ten billion— all of whom have a very high quality of life, there must be some much larger number of people whose existence, if other things are equal, would be better, even though these people would have lives that are barely worth living.
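The arithmetic behind this worry can be sketched in a few lines of Python. The population sizes and welfare levels below are purely illustrative assumptions (they are not Parfit's figures), and the `World` class is a hypothetical helper. The sketch compares a "total" view, which sums welfare across everyone, with an "average" view, which looks only at welfare per person.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class World:
    """A world with no inequality: everyone has the same positive welfare."""
    name: str
    population: int   # number of people (illustrative assumption)
    welfare: float    # quality of life per person (illustrative assumption)

    def total(self) -> float:
        # Total view: add up everyone's welfare.
        return self.population * self.welfare

    def average(self) -> float:
        # Average view: with no inequality, this is just the per-person level.
        return self.welfare


# Made-up numbers chosen only to mirror the shape of Parfit's A, B, and Z.
A = World("A", 10_000_000_000, 100.0)      # ten billion, very high quality of life
B = World("B", 20_000_000_000, 80.0)       # twice as many, somewhat worse off
Z = World("Z", 2_000_000_000_000, 1.0)     # enormous population, barely worth living

worlds = [A, B, Z]
by_total = [w.name for w in sorted(worlds, key=World.total, reverse=True)]
by_average = [w.name for w in sorted(worlds, key=World.average, reverse=True)]

print("Ranked by total welfare:  ", by_total)
print("Ranked by average welfare:", by_average)
```

With these assumed numbers the two views rank the worlds in opposite orders. However low the welfare level in Z is set (so long as it stays positive), a large enough population will push its total above A's, which is the step the total view seems committed to.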

We’ll discuss the ethical value of possible people and the Repugnant Conclusion at tomorrow’s Strange Philosophy Thing, at 4:30 in the Strange Lounge of Main Hall. If you’d like to learn more about these topics, here are some resources:

Parfit’s Article, “Overpopulation and the Quality of Life”.

The SEP Entry on the Repugnant Conclusion

An Economist Podcast on this material. (Skip to 11:20 for the piece on our topic.)

See you soon! Professor Phelan

Creativity: What is it? Can we improve it?

Join us for the Strange Philosophy Thing today (11/14), at 4:30 p.m. for refreshments and some fun, informal philosophy talk. Today’s topic: Creativity.

Creativity is among the most valued attributes in a person. Corporations rank creativity as the most sought-after attribute in potential employees. And each of us values conversation with a witty, creative interlocutor. But what is creativity? How is it realized by the mind? How can we develop it? And what aspects of contemporary life impede it?

In a short, recent article, the philosopher of mind Peter Carruthers distinguishes two kinds of creativity: online and offline. “Online creativity,” Carruthers writes, “happens ‘in the moment,’ under time pressure.” Importantly, online creativity is the kind of creativity exhibited in a task with which one is currently engaged. It is exhibited, for example, when the person with whom you’re talking says something witty or unexpected. It is also exhibited when the jazz musician offers a brilliant and unforeseen elaboration on her bandmate’s musical motif. Offline creativity, on the other hand, “happens while one’s mind is engaged with something else”. Here is a memorable example of offline creativity from the 20th-century physicist and public intellectual Carl Sagan:

I can remember one occasion, taking a shower with my wife while high, in which I had an idea on the origins and invalidities of racism in terms of Gaussian distribution curves. It was a point obvious in a way, but rarely talked about. I drew curves in soap on the shower wall and went to write the idea down. One idea led to another, and at the end of about an hour of extremely hard work I had found I had written eleven short essays on a wide range of social, political, philosophical, and human biological topics…I have used them in university commencement addresses, public lectures, and in my books.

Carruthers’ primary focus is on offline creativity of the sort Sagan describes, which, Carruthers writes, “…correlates with real-world success in most forms of art, science, and business innovation”. Carruthers claims that this sort of creativity is realized by an interplay between two distinct mental capacities for attention. One of these capacities is described by psychologists as a top-down system. Sometimes metaphorically characterized as a spotlight, top-down attention is the active, deliberate attending by an individual to her occurring mental states. Such attention is sufficient to bring mental states to consciousness. Think about deliberately attending to the distant, percussive sound of a jackhammer that you’d been successfully ignoring: suddenly the sound, which had been heretofore unconscious, rises to consciousness. The other capacity is a bottom-up process, which Carruthers characterizes as the ‘relevance system’. In addition to our own willful direction of attention, it seems that processes within our minds constantly assess the relevance of non-conscious mental states to “current goals and standing values”. This bottom-up system puts forward those mental states it deems relevant as candidates for top-down attentional focus. So, for example, you may have a standing value to be polite to people you know. Attuned to this value, the bottom-up system promotes your perceptions of familiar faces in a crowd for top-down attentional focus.

Carruthers contends that this interplay between bottom-up and top-down attention is essential to offline creativity, writing that:

If you also have a background puzzle or problem of some sort that you haven’t yet been able to solve, chances are the goal of solving it will still be accessible, and will be one of the standards against which unconsciously-activated ideas are evaluated for relevance. As a result, the solution (or at least, a candidate solution) may suddenly occur to you out of the blue. Creativity doesn’t so much result from a Muse whispering in your ear as it does from the relevance system shouting, “Hey, try this!”.

But Carruthers contends that such offline creativity is impeded by activities that focus our attention. The best route to offline creativity, he contends, is to let your mind be offline sometimes. But contemporary life, with its ever-present smartphones and social media, makes this difficult. Carruthers characterizes the situation as follows: “Since there is no room for minds to wander if attention is dominated by attention-grabbing social-media posts, that means there is less scope for creativity, too.”

What do we think? Are there really two types of creativity? Are they “anti-correlated” as Carruthers suggests? Has creativity really been declining over recent centuries? How is creativity impeded and can we devise ways to make it flourish? Are there any trade-offs to a more creative society?

Ethics, Aesthetics, and Ontology in Video Games

Join us for the Strange Philosophy Thing tomorrow (11/07), at 4:30 p.m. for refreshments and some fun, informal philosophy talk. We’ll be discussing three aspects of video games: the ethics of virtual actions (do ethical codes differ in games?), the aesthetics of video games (can a video game be interactive art?), and the ontology of video games (Heraclitus might have said that you can’t play the same video game twice. We’ll consider the identity conditions for games, and the status of their objects.).

Here is a 1000-word essay that tackles each of these questions: https://1000wordphilosophy.com/2021/09/26/videogames-and-philosophy/

The Paradox of Horror

Join us for a SpOoKy edition of the Strange Philosophy Thing tomorrow, Halloween (10/31), at 4:30 p.m. for refreshments and some fun, informal philosophy talk. Grab a treat from our candy stash and get ready to discuss:

Topic: The Paradox of Horror

Consider the following three claims:

1. Horror stories and movies cause negative emotions in the audience (e.g., fear, disgust).

2. We want to avoid things that cause negative emotions.

3. We do not want to avoid horror stories and movies.

Though each of these claims seems intuitively plausible, they cannot all be true. For example, if claim 1 is true and horror causes negative emotions, and if claim 2 is true and we want to avoid things that cause negative emotions, then it seems to follow that we would want to avoid horror. But that’s inconsistent with claim 3. On the other hand, if, as claim 2 has it, we want to avoid things that cause negative emotions, and if, as claim 3 has it, we do not want to avoid horror, then, presumably, horror doesn’t cause negative emotions. But that’s inconsistent with claim 1. So, it seems that, in order to avoid inconsistency, we must reject (or modify) one of these claims. Which should we reject?
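For anyone who likes to see the logic checked mechanically, here is a minimal truth-table sketch in Python. It rests on a simplifying assumption: claim 2 is applied to horror only, so that it reads "if horror causes negative emotions, then we want to avoid horror." The variable and function names are illustrative choices, not part of any standard formalization.

```python
from itertools import product

# p: "horror causes negative emotions"
# q: "we want to avoid horror"


def claim1(p: bool, q: bool) -> bool:
    # Claim 1: horror causes negative emotions.
    return p


def claim2(p: bool, q: bool) -> bool:
    # Claim 2, applied to horror (a simplifying assumption):
    # if horror causes negative emotions, we want to avoid it.
    return (not p) or q


def claim3(p: bool, q: bool) -> bool:
    # Claim 3: we do not want to avoid horror.
    return not q


claims = [claim1, claim2, claim3]


def satisfiable(subset) -> bool:
    """True if some assignment of p and q makes every claim in subset true."""
    return any(
        all(claim(p, q) for claim in subset)
        for p, q in product([True, False], repeat=2)
    )


all_three = satisfiable(claims)
pairs = {
    (i + 1, j + 1): satisfiable([claims[i], claims[j]])
    for i in range(3)
    for j in range(i + 1, 3)
}

print("All three claims jointly satisfiable?", all_three)
for pair, ok in pairs.items():
    print(f"Claims {pair[0]} and {pair[1]} jointly satisfiable?", ok)
```

On this simplified reading, no assignment makes all three claims true, but every pair can be true together. That matches the structure described above: giving up any one of the three claims is enough to restore consistency, so logic alone does not settle which one should go.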

You need not prepare in any way in order to attend the Strange Thing discussion. However, if you’re interested, there’s a wealth of info on the paradox of horror available from across the web. Here’s a short video on the topic by, perhaps, the premier philosopher of horror, Noël Carroll. We discussed this topic back in 2017 and Professor Armstrong provided a great write-up here. And, finally, for a look at the neuroscience of horror, check out this recent audio interview with Nina Nesseth, author of the book Nightmare Fuel: The Science of Horror Films.

See you tomorrow! And why not bring a friend to kick off All Hallows’ Eve in style, with philosophy?

Post-Theory Science

Join the philosophy faculty and other interested parties for the next installment of the 22–23 Strange Philosophy Thing. The Strange Thing meets in the Strange Lounge (room 103) of Main Hall. We gather there most Mondays of the fall and winter terms from 4:30–5:30 for refreshments and informal conversation around a given topic. It’s a lot of fun!

I take my inspiration for this week’s Strange Thing from this article from The Guardian, by Laura Spinney. Reading it is optional. I’ll hit the most important points, and highlight the most important questions the article raises, quoting some useful passages along the way.

A traditional and familiar strategy for carrying out scientific research is to explain a type of phenomenon by formulating a theory (a hypothesis) that predicts some particulars of that type of phenomenon, and then running experiments to confirm that those predictions are accurate. Newton, for example, hypothesized that gravity operated in the absence of air resistance according to his famous inverse square law. And sure enough, he was able to derive Kepler’s empirically well-confirmed laws of planetary motion from it. It’s easy to miss the fact that a lot of scientific practice, especially recently with the use of AI, differs drastically from this picture. As Spinney notes,

“Facebook’s machine learning tools predict your preferences better than any psychologist [could]. AlphaFold, a program built by DeepMind, has produced the most accurate predictions yet of protein structures based on the amino acids they contain. Both are completely silent on why they work: why you prefer this or that information; why this sequence generates that structure.”

They are silent on why they work because of the opacity of what goes on inside the neural nets that generate the predictions from ever-growing datasets.

Spinney grants that some science is, at this stage at least, merely assisted by AI. She notes that Tom Griffiths, a psychologist at Princeton, is improving upon Daniel Kahneman and Amos Tversky’s prospect theory of human economic behavior by training a neural net on “a vast dataset of decisions people took in 10,000 risky choice scenarios, then [comparing it to] how accurately it predicted further decisions with respect to prospect theory.” What they found was that people use heuristics when there are too many options for the human brain to compute and compare the probabilities of all of them. But people use different heuristics depending on their experiences (a stockbroker and a teenage bitcoin trader, for example, may rely on different ones). What is ultimately generated via this process doesn’t look much like a theory but rather, as Spinney describes it, ‘a branching tree of “if… then”-type rules, which is difficult to describe mathematically’. She adds,

“What the Princeton psychologists are discovering is still just about explainable, by extension from existing theories. But as they reveal more and more complexity, it will become less so – the logical culmination of that process being the theory-free predictive engines embodied by Facebook or AlphaFold.”

Of course, there is a concern about bias in AI systems, particularly those that are fed small or biased data sets. But can’t we expect the relevant data sets eventually to become so large that bias is no longer an issue, and their predictions become correspondingly accurate? Will science look more and more like this in the future? What does that mean for the role of human beings in science?

This last question can be addressed with the help of the notion of interpretability. Theories are interpretable. They involve the postulation of entities and relations between them, which can be understood by human beings, and that explain and predict the phenomena under investigation. But AI-based methods of prediction preclude interpretability due to the opaqueness of the AI processes by which they are generated. Humans don’t observe the process of the weights of the nodes of the neural net changing as new data is fed in. AI-based science appears to prevent us from having an explanation of why scientific predictions are accurate, and thus presumably precludes us from providing one another with explanations of the phenomena under investigation. There are at least two sorts of reasons to be leery of non-interpretability. One is practical, the other theoretical.

On the practical side, Bingni Brunton and Michael Beyeler, neuroscientists at the University of Washington, Seattle, noted in 2019 that “it is imperative that computational models yield insights that are explainable to, and trusted by, clinicians, end-users and industry”. Spinney notes a good example of this:

‘Sumit Chopra, an AI scientist who thinks about the application of machine learning to healthcare at New York University, gives the example of an MRI image. It takes a lot of raw data – and hence scanning time – to produce such an image, which isn’t necessarily the best use of that data if your goal is to accurately detect, say, cancer. You could train an AI to identify what smaller portion of the raw data is sufficient to produce an accurate diagnosis, as validated by other methods, and indeed Chopra’s group has done so. But radiologists and patients remain wedded to the image. “We humans are more comfortable with a 2D image that our eyes can interpret,” he says.’

On the theoretical side, is such understanding important for its own sake, in addition to its practical value, or does it matter solely because of practical considerations like the one mentioned above? Spinney suggests that AI-based science circumvents the need for human creativity and intuition.

“One reason we consider Newton brilliant is that in order to come up with his second law he had to ignore some data. He had to imagine, for example, that things were falling in a vacuum, free of the interfering effects of air resistance.”

She adds,

‘In Nature last month, mathematician Christian Stump, of Ruhr University Bochum in Germany, called this intuitive step “the core of the creative process”. But the reason he was writing about it was to say that for the first time, an AI had pulled it off. DeepMind had built a machine-learning program that had prompted mathematicians towards new insights – new generalisations – in the mathematics of knots.’

Is this in itself a reason to be leery of AI-based science, because it will reduce the opportunity for creative expression and the exercise of human intuition? Would it do this?

Hope to see you Monday for discussion with the philosophy department and refreshments!