If a random sample of the population is reading this post, then more than 10% of you are saying “why, yes. Yes it is!”

What about the other 90% of you? Hmmm, let me see here — How about 1111? 0000? 1212? 7777?

Those next four numbers should account for another 10% of you, so together those five codes account for one in every five (20%) of all four-digit passwords. These are the numbers that protect your savings and checking accounts, among other things, from would-be interlopers.

Jeepers.

It turns out that only 426 codes account for more than half of all passwords. If all 10,000 possible four-digit codes were equally common, it would take 5,000, of course. So someone who nicks your debit card has a pretty good chance of cracking your code just by going through a list of “easy to remember” numbers.
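If you want to check that kind of figure yourself, here is a minimal sketch of the arithmetic. It assumes you already have a tally of how often each code shows up. The function name `codes_needed` and the tiny `toy` tally are made up for illustration and are not taken from the Data Genetics data.

```python
from collections import Counter


def codes_needed(counts, target=0.5):
    """Return how many of the most common codes it takes to cover
    at least `target` (a fraction from 0 to 1) of all observed codes."""
    total = sum(counts.values())
    covered = 0
    # Walk through the codes from most to least common, adding up their counts.
    for rank, (_, n) in enumerate(counts.most_common(), start=1):
        covered += n
        if covered >= target * total:
            return rank
    return len(counts)


# Baseline: every one of the 10,000 four-digit codes is used equally often.
uniform = Counter({f"{i:04d}": 1 for i in range(10_000)})
print(codes_needed(uniform))  # 5000

# Hypothetical, made-up tally just to show the effect of a skewed distribution.
toy = Counter({"1234": 50, "1111": 30, "0000": 15, "8068": 5})
print(codes_needed(toy, target=0.9))  # 3
```

The more lopsided the tally, the smaller the number that comes back, which is exactly what makes a short list of popular codes so useful to a thief.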

How do I know all of this?

The crew over at the Data Genetics blog got hold of 3.4 million passwords from breached databases and took a look at the frequency of various numbers in a mind-boggling array of ways. Very cool post. And, for most of you, it's probably time to change your password.

For those 0.000744% of you with 8068, the least common password, it’s probably time to change your password, too. Once people see that it is the least common, they will pick it, and it won’t be the least common any more. Oh, 2727.

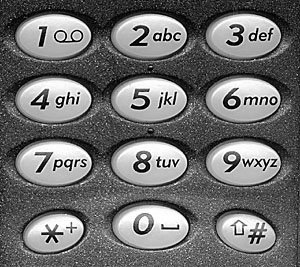

2580 comes in at #22. Can you see why?